Xiaomi MiMo-7B: Open-Source AI for Reasoning & Coding

Xiaomi MiMo-7B: A 7-Billion Parameter Revolution in Open-Source AI

Xiaomi’s entry into the AI arena in April 2025 wasn’t just a splash; it was a tidal wave. With the launch of MiMo-7B, its first open-source large language model (LLM), the company took on established giants. This isn’t your average conversational AI: MiMo-7B is designed specifically for advanced reasoning in mathematics, programming, and logic. This article dives into MiMo-7B’s features, training innovations, benchmark results, and potential applications, and shows how Xiaomi is redefining what a compact, efficient AI model can do. Get ready to be impressed, and maybe even a little surprised.

1. What is MiMo-7B? Understanding Xiaomi’s AI Breakthrough

MiMo-7B, developed by Xiaomi’s Big Model Core Team, isn’t just another LLM. While many models focus on general conversation, MiMo-7B’s core strength lies in advanced reasoning: tackling complex mathematical problems, generating clean code in multiple programming languages, and solving intricate logic puzzles, all without the massive parameter counts of some competitors.

The team focused on getting the most performance out of a relatively compact 7 billion parameters. The result is an efficient model that outperforms some much larger models on key benchmarks. Xiaomi has released four open-source (Apache 2.0) versions, all available on Hugging Face and GitHub:

- MiMo-7B-Base: The foundational model, trained from scratch with a strong emphasis on logical patterns. Think of it as the blank canvas.

- MiMo-7B-SFT: This version’s been fine-tuned with supervised learning, leading to increased accuracy and more reliable results. Think of it as adding the initial brushstrokes.

- MiMo-7B-RL-Zero: Trained using reinforcement learning (RL) starting from the base model. It learns from its mistakes and improves its problem-solving strategy.

- MiMo-7B-RL: The most advanced version, further refined with RL based on the SFT model. This is the finished masterpiece, showing the best performance in both math and code tasks.

https://github.com/XiaomiMiMo/MiMo

https://www.gizmochina.com/2025/05/02/xiaomi-launches-mimo-7b-its-first-open-source-llm-for-reasoning-and-coding/

https://www.gadgets360.com/ai/news/xiaomi-mimo-reasoning-ai-model-launch-size-8296696

2. Key Innovations Behind MiMo-7B’s Success

MiMo-7B’s success isn’t just about luck; it’s a testament to Xiaomi’s innovative approach to both pre-training and fine-tuning. They’ve broken the mold, proving that massive models aren’t always necessary for complex tasks.

2.1. Pre-training: Focusing on Reasoning Density

Xiaomi didn’t just throw data at the problem; they meticulously crafted a data pipeline that prioritized reasoning patterns:

High-Quality Data: MiMo-7B-Base was trained on a massive 25 trillion tokens, but a key aspect was the focused curation. A whopping 200 billion tokens were explicitly focused on reasoning. They used a three-stage data mixing strategy, gradually increasing the proportion of mathematical and programming content to 70%, complemented by 10% synthetic problem-solving data. It’s all about quality, not just quantity.
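To make the three-stage mixing idea concrete, here is a minimal sketch of sampling training documents from weighted sources, with the weights changing from stage to stage. Only the final-stage shares (about 70% math and code, about 10% synthetic problem-solving data) come from the description above; the source names, the earlier-stage weights, and the `sample_sources` helper are hypothetical, and Xiaomi’s actual pipeline is not shown.

```python
import random
from collections import Counter

# Hypothetical three-stage mixture. Only the final stage's ~70% math/code and
# ~10% synthetic shares come from the article; everything else is illustrative.
STAGE_MIXTURES = [
    {"web_text": 0.70, "math_code": 0.25, "synthetic": 0.05},
    {"web_text": 0.40, "math_code": 0.55, "synthetic": 0.05},
    {"web_text": 0.20, "math_code": 0.70, "synthetic": 0.10},
]

def sample_sources(stage: int, n: int, seed: int = 0) -> Counter:
    """Draw n source labels according to one stage's mixture weights."""
    mixture = STAGE_MIXTURES[stage]
    rng = random.Random(seed)
    labels = rng.choices(list(mixture), weights=list(mixture.values()), k=n)
    return Counter(labels)

for stage in range(len(STAGE_MIXTURES)):
    counts = sample_sources(stage, 10_000, seed=stage)
    shares = {name: round(c / 10_000, 2) for name, c in sorted(counts.items())}
    print(f"stage {stage + 1}: {shares}")
```

In a real pipeline the labels would select which shard the next document comes from; shifting the weights between stages is what gradually raises the density of reasoning-heavy data.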

Multi-Token Prediction (MTP): In addition to the usual next-token objective, MiMo-7B is trained to predict several upcoming tokens at once. Xiaomi reports that this improves model performance and speeds up inference. Think of it as reading ahead.
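The difference between next-token and multi-token prediction is easiest to see in the training targets. This minimal sketch builds, for each position, the next `depth` tokens that MTP heads would be asked to predict; the helper name is hypothetical, and it does not reproduce MiMo’s actual MTP module (number of heads, layer sharing, loss weighting).

```python
from typing import List

def mtp_targets(tokens: List[int], depth: int) -> List[List[int]]:
    """For each position t, return tokens t+1 .. t+depth as prediction targets.

    A standard language model trains only on tokens[t + 1]; an MTP objective
    adds extra heads for tokens[t + 2], ..., tokens[t + depth]. Positions
    without a full window of future tokens are dropped in this sketch.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    return [tokens[t + 1 : t + 1 + depth] for t in range(len(tokens) - depth)]

print(mtp_targets([10, 11, 12, 13, 14], depth=2))
# → [[11, 12], [12, 13], [13, 14]]
```

With `depth=1` this reduces to ordinary next-token targets. At inference time, the extra predictions can serve as draft tokens that the main model verifies in one forward pass (speculative decoding), which is one way MTP can speed up generation.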

Multidimensional Filtering: Xiaomi refined its text extraction tools and applied multidimensional filters to concentrate on examples rich in logic. This ensured the model was exposed to complex reasoning patterns from the very beginning: a focus on effective training rather than brute force.
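One simple way to read "multidimensional filtering" is that each document is scored along several axes and must clear a threshold on every one. The sketch below assumes exactly that; the dimension names, thresholds, and scores are hypothetical, and the article does not describe Xiaomi’s actual filters or scoring models.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Document:
    text: str
    # Hypothetical per-dimension quality scores in [0, 1], e.g. from classifiers.
    scores: Dict[str, float] = field(default_factory=dict)

# Hypothetical thresholds: a document is kept only if it clears every dimension.
THRESHOLDS: Dict[str, float] = {
    "extraction_quality": 0.6,
    "language_quality": 0.7,
    "reasoning_density": 0.5,
}

def passes_filters(doc: Document, thresholds: Dict[str, float] = THRESHOLDS) -> bool:
    """True if the document meets the threshold on every dimension.

    A missing score counts as 0.0, so unscored documents are rejected.
    """
    return all(doc.scores.get(dim, 0.0) >= t for dim, t in thresholds.items())

def filter_corpus(docs: List[Document]) -> List[Document]:
    return [d for d in docs if passes_filters(d)]

corpus = [
    Document("Proof that sqrt(2) is irrational: assume sqrt(2) = p/q ...",
             {"extraction_quality": 0.9, "language_quality": 0.9, "reasoning_density": 0.8}),
    Document("Click here to subscribe to our newsletter!",
             {"extraction_quality": 0.8, "language_quality": 0.8, "reasoning_density": 0.1}),
    Document("Table of integrals (garbled extraction) ...",
             {"extraction_quality": 0.3, "language_quality": 0.6, "reasoning_density": 0.7}),
]
kept = filter_corpus(corpus)
print([d.text[:30] for d in kept])
```

Requiring every dimension to pass (rather than averaging scores) means a document rich in reasoning but badly extracted is still discarded, which matches the article’s emphasis on clean, logic-dense data.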

https://github.com/XiaomiMiMo/MiMo

https://www.marktechpost.com/2025/05/01/xiaomi-introduced-mimo-7b-a-compact-language-model-that-outperforms-larger-models-in-mathematical-and-code-reasoning-through-rigorous-pre-training-and-reinforcement-learning/

https://apidog.com/blog/mimo-7b-rl/

2.2. Fine-tuning: Innovative Reinforcement Learning

Reinforcement learning (RL) is the secret sauce behind MiMo-7B-RL’s exceptional performance. Xiaomi implemented several advanced techniques:

Curated Dataset: They used 130,000 math and programming problems whose answers can be checked automatically by rule-based verifiers. Each problem was also cleaned and rated for difficulty, optimizing the training process.
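What a rule-based check might look like for math answers: normalize the model’s final answer and the reference, compare them numerically when both parse as numbers, and fall back to exact string matching otherwise. This is a minimal sketch with hypothetical helper names; Xiaomi’s verifier is not shown in the article, and production verifiers also parse LaTeX, units, and symbolic expressions.

```python
from fractions import Fraction
from typing import Optional

def normalize_answer(ans: str) -> str:
    """Hypothetical normalization: drop $ signs, spaces, and a trailing period."""
    return ans.strip().rstrip(".").replace("$", "").replace(" ", "")

def to_number(ans: str) -> Optional[Fraction]:
    """Parse integers, decimals, and simple fractions like '3/4'; else None."""
    try:
        return Fraction(ans)
    except (ValueError, ZeroDivisionError):
        return None

def math_reward(model_answer: str, reference: str) -> float:
    """Binary accuracy reward: 1.0 if the final answer matches, else 0.0."""
    a, b = normalize_answer(model_answer), normalize_answer(reference)
    na, nb = to_number(a), to_number(b)
    if na is not None and nb is not None:
        return 1.0 if na == nb else 0.0
    return 1.0 if a == b else 0.0

print(math_reward("0.5", "1/2"), math_reward("$7$", "7"), math_reward("3", "4"))
```

Because the check is deterministic, the reward cannot drift the way a learned reward model can; the trade-off is that it only works for problems with a single checkable answer.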

Test Difficulty Driven Reward: To address “sparse rewards” in complex coding tasks (where a solution earns nothing unless it passes every test), they introduced a reward that assigns fine-grained scores to test cases of varying difficulty, so partially correct solutions still earn credit. This makes the learning process much more efficient.
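The contrast between a sparse all-or-nothing reward and a difficulty-weighted one can be shown in a few lines. This sketch assumes each test case carries a positive difficulty weight and that the reward is the weighted fraction of tests passed; the weighting scheme and function names are hypothetical and not the exact formula from Xiaomi’s paper.

```python
from typing import List

def binary_reward(passed: List[bool]) -> float:
    """Sparse baseline: reward only when every test passes."""
    return 1.0 if all(passed) else 0.0

def difficulty_weighted_reward(passed: List[bool], difficulty: List[float]) -> float:
    """Partial credit, weighting each test by a positive difficulty score.

    passed[i] says whether test i passed; harder tests carry larger weights.
    Returns a score in [0, 1].
    """
    if not passed or len(passed) != len(difficulty):
        raise ValueError("need one difficulty weight per test")
    if any(w <= 0 for w in difficulty):
        raise ValueError("difficulty weights must be positive")
    earned = sum(w for ok, w in zip(passed, difficulty) if ok)
    return earned / sum(difficulty)

results = [True, True, False]   # the hardest test fails
weights = [1.0, 1.0, 2.0]
print(binary_reward(results), difficulty_weighted_reward(results, weights))
```

Here the binary reward gives the policy no signal at all, while the weighted reward reports that half of the difficulty-weighted work is done, which gives the RL update something to climb.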

Data Resampling: They resampled easier problems at a controlled rate, improving rollout sampling efficiency and stabilizing policy updates in the later stages of RL training.
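A minimal sketch of one way to implement such resampling, assuming that problems the policy already solves on every rollout are moved to a separate "easy" pool and replayed only with a small probability. The rationale: in group-based RL, a problem every rollout gets right yields no useful learning signal, so most rollouts should go to problems that still do. The pass-rate threshold, the 10% replay probability, and the helper names are hypothetical.

```python
import random
from typing import Dict, List, Tuple

def split_by_pass_rate(pass_rates: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Move problems the policy already solves every time into an 'easy' pool."""
    hard = [p for p, rate in pass_rates.items() if rate < 1.0]
    easy = [p for p, rate in pass_rates.items() if rate >= 1.0]
    return hard, easy

def build_batch(hard_pool: List[str], easy_pool: List[str], batch_size: int,
                easy_prob: float, rng: random.Random) -> List[Tuple[str, str]]:
    """Fill a batch mostly from the hard pool, replaying an easy problem
    with probability easy_prob."""
    if not hard_pool:
        raise ValueError("hard_pool must not be empty")
    batch = []
    for _ in range(batch_size):
        if easy_pool and rng.random() < easy_prob:
            batch.append(("easy", rng.choice(easy_pool)))
        else:
            batch.append(("hard", rng.choice(hard_pool)))
    return batch

pass_rates = {"p1": 1.0, "p2": 0.25, "p3": 0.5, "p4": 1.0, "p5": 0.0}
hard, easy = split_by_pass_rate(pass_rates)
batch = build_batch(hard, easy, batch_size=1000, easy_prob=0.1, rng=random.Random(0))
easy_share = sum(1 for kind, _ in batch if kind == "easy") / len(batch)
print(hard, easy, round(easy_share, 3))
```

Keeping a small replay rate, rather than dropping solved problems entirely, is what lets the policy keep seeing easy cases without spending most of its compute on them.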

Seamless Rollout Engine: This custom-built engine made training 2.29 times faster and validation 1.96 times faster by minimizing GPU idle time. Efficiency is key.
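GPU idle time in RL often comes from waiting on reward computation (running test cases, checking answers) before the next rollout can start. The sketch below illustrates one general idea for hiding that wait: score step k in the background while step k + 1 is being generated. It is an assumption-laden toy built on `asyncio`, with hypothetical stand-in functions and sleeps in place of real work, not a description of how Xiaomi’s engine is built.

```python
import asyncio
from typing import List, Optional

async def generate_rollouts(step: int) -> List[str]:
    # Hypothetical stand-in for GPU-side generation.
    await asyncio.sleep(0.01)
    return [f"step{step}-sample{i}" for i in range(4)]

async def score_rollouts(rollouts: List[str]) -> List[float]:
    # Hypothetical stand-in for CPU-side verification; toy rule for illustration.
    await asyncio.sleep(0.01)
    return [1.0 if r.endswith(("0", "2")) else 0.0 for r in rollouts]

async def run(num_steps: int) -> List[List[float]]:
    """Score step k in the background while step k + 1 is being generated."""
    all_rewards: List[List[float]] = []
    pending: Optional[asyncio.Task] = None
    for step in range(num_steps):
        rollouts = await generate_rollouts(step)   # overlaps with `pending`
        if pending is not None:
            all_rewards.append(await pending)
        pending = asyncio.create_task(score_rollouts(rollouts))
    if pending is not None:
        all_rewards.append(await pending)
    return all_rewards

rewards = asyncio.run(run(3))
print(rewards)
```

The overlap means the generator never waits for scoring of the previous batch to finish before starting the next one; the cost is that rewards arrive one step late, which a real trainer has to account for.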

https://github.com/XiaomiMiMo/MiMo

https://www.fonearena.com/blog/452648/xiaomi-mimo-open-source-ai-model.html

https://arxiv.org/abs/2505.07608

2.3. MiMo-VL-7B: Stepping into Multimodal Reasoning

Xiaomi didn’t stop at text; they also launched MiMo-VL-7B, a vision-language model (VLM) combining reasoning with visual processing. This model is a significant leap forward:

- It uses a high-resolution ViT encoder to preserve visual detail.

- An efficient MLP projector aligns vision and language seamlessly.

- A four-stage training process, including projector warm-up, vision-language alignment, multimodal pre-training, and supervised fine-tuning.

- A Mixed On-policy Reinforcement Learning (MORL) framework integrates reward signals for perceptual accuracy, logical reasoning, and human preferences.
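The encoder/projector/LLM pipeline described above boils down to a shape change: the vision encoder emits one feature vector per image patch, and the projector maps each into the language model’s embedding space so the patches can sit in the input sequence next to text tokens. This pure-Python toy assumes a two-layer MLP with a GELU activation, no biases, random weights, and tiny made-up sizes; MiMo-VL’s real dimensions and exact projector layout are not given in the article.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def gelu(x: float) -> float:
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))

def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

class MLPProjector:
    """Two-layer MLP (no biases, for brevity) mapping vision-encoder patch
    features of size d_vision to the language model's embedding size d_model."""

    def __init__(self, d_vision: int, d_hidden: int, d_model: int, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = random_matrix(d_hidden, d_vision, rng)
        self.w2 = random_matrix(d_model, d_hidden, rng)

    def __call__(self, patches: List[Vector]) -> List[Vector]:
        return [matvec(self.w2, [gelu(h) for h in matvec(self.w1, p)]) for p in patches]

# Toy sizes: 16 image patches with 32-dim features, projected to a 48-dim "LLM".
rng = random.Random(1)
patches = [[rng.uniform(-1.0, 1.0) for _ in range(32)] for _ in range(16)]
visual_tokens = MLPProjector(d_vision=32, d_hidden=64, d_model=48)(patches)
print(len(visual_tokens), len(visual_tokens[0]))  # 16 48
```

In the projector warm-up stage listed above, a component like this is typically the part being trained first, so the language model learns to read visual tokens before the rest of the system is tuned.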

MiMo-VL-7B-RL achieved the highest Elo score among open-source vision-language models in Xiaomi’s internal evaluation, which used GPT-4o as the judge, surpassing models with up to 72 billion parameters.
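An Elo score turns many pairwise "which answer is better?" judgments into a single rating. Under the classic Elo model, the expected score of A against B is $E_A = 1 / (1 + 10^{(R_B - R_A)/400})$, and each match moves a rating by $K(S_A - E_A)$. The minimal sketch below assumes K = 32 and a judge that declares a win, loss, or tie; Xiaomi’s actual rating procedure (K-factor, match ordering, or a batch fit instead of online updates) is not described in the article.

```python
from typing import Tuple

def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))

def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0) -> Tuple[float, float]:
    """score_a is 1.0 if the judge prefers A, 0.5 for a tie, 0.0 if it prefers B."""
    e_a = expected_score(r_a, r_b)
    new_a = r_a + k * (score_a - e_a)
    new_b = r_b + k * ((1.0 - score_a) - (1.0 - e_a))
    return new_a, new_b

print(elo_update(1000.0, 1000.0, 1.0))  # (1016.0, 984.0)
```

Two equally rated models each have a 50% expected score, so a win moves the winner up and the loser down by K/2 = 16 points; beating a much higher-rated model moves the ratings further.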

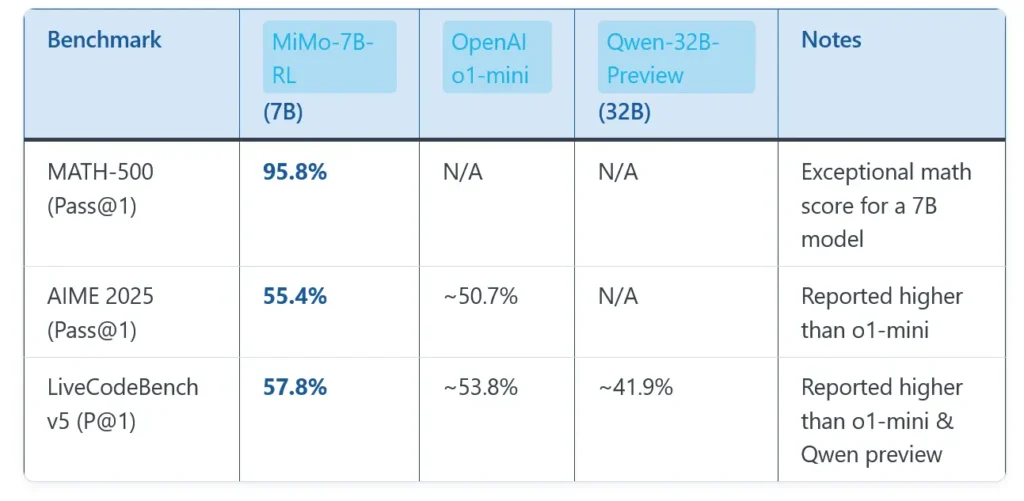

3. MiMo-7B’s Performance: Benchmarks Speak Volumes

MiMo-7B-RL has consistently outperformed larger models in specific tasks:

Mathematics:

- MATH-500: 95.8% accuracy on the first pass (Pass@1).

- AIME 2024: 68.2% Pass@1 (average of 32 runs).

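- AIME 2025: 55.4% Pass@1. Xiaomi reports that a later checkpoint, trained after scaling the SFT dataset to 6 million instances, surpasses DeepSeek R1 (79.8%); that comparison does not apply to the 55.4% result.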

Programming:

- LiveCodeBench v5: 57.8% Pass@1.

- LiveCodeBench v6: 49.3% Pass@1.

General Reasoning:

- GPQA Diamond: 54.4% Pass@1.

- IF-Eval (Prompt Strict): 61.0% Pass@1.
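The Pass@1 figures above are averages over repeated sampling (for example, 32 runs for AIME 2024). The sketch below shows how such numbers are commonly computed: per-run accuracy averaged across runs, and the standard unbiased pass@k estimator from OpenAI’s Codex evaluation, $1 - \binom{n-c}{k} / \binom{n}{k}$ for $n$ samples with $c$ correct, which reduces to $c/n$ when $k = 1$. The helper names are ours; the article does not say which evaluation scripts Xiaomi used.

```python
from math import comb
from typing import List

def mean_pass_at_1(run_accuracies: List[float]) -> float:
    """Pass@1 reported as the mean accuracy over independent sampling runs."""
    if not run_accuracies:
        raise ValueError("need at least one run")
    return sum(run_accuracies) / len(run_accuracies)

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

# One problem answered correctly in 8 of 32 samples:
print(pass_at_k(32, 8, 1), pass_at_k(32, 8, 4))
```

Averaging over many samples matters on small benchmarks like AIME (30 problems per year), where a single run can swing the score by several points.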

These results showcase MiMo-7B’s ability to compete with models like OpenAI o1-mini and Alibaba Qwen-32B despite its significantly smaller size. While scores on general knowledge benchmarks like MMLU-Pro are respectable (mid-to-high 50% range), they reflect its specialized focus on reasoning.

https://www.gizmochina.com/2025/05/02/xiaomi-launches-mimo-7b-its-first-open-source-llm-for-reasoning-and-coding/

https://www.fonearena.com/blog/452648/xiaomi-mimo-open-source-ai-model.html

https://apidog.com/blog/mimo-7b-rl/

4. Applications of MiMo-7B: Real-World Impact

MiMo-7B’s compact size and efficiency make it ideal for various applications, especially on resource-constrained devices:

- Education: Step-by-step math problem solving and programming tutorials, improving learning outcomes.

- Software Development: Automating code debugging, algorithm optimization, and unit test generation.

- Research: Supporting automated theorem proving, logical analysis, and data-driven hypothesis testing.

- Edge Computing: Running reasoning tasks on IoT devices, smartphones, and other resource-limited systems.

- Enterprise Automation: Applications in finance, healthcare, and logistics where logical reasoning is crucial.
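A quick back-of-the-envelope calculation shows why a 7-billion-parameter model is a plausible fit for edge devices: weight memory is the parameter count times the bytes per parameter. This counts weights only (no KV cache or activations), uses the nominal 7 billion figure, and assumes quantized variants exist or can be produced; the article does not say which precisions Xiaomi ships.

```python
from typing import Dict

PARAMS = 7_000_000_000  # nominal parameter count; the exact checkpoint size may differ

BYTES_PER_PARAM: Dict[str, float] = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params: int, dtype: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(PARAMS, dtype):.1f} GB")
```

At 16-bit precision the weights alone need about 14 GB, which is workstation territory; 4-bit quantization brings that to about 3.5 GB, the range where high-end phones and embedded boards become realistic targets.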

Integrating MiMo-7B into Xiaomi’s ecosystem, including HyperOS and the Xiao AI assistant, promises to boost the intelligence of smartphones, smart homes, and electric vehicles like the Xiaomi SU7.

https://ai-mimo.com/

https://firexcore.com/blog/xiaomi-mimo-7b/

5. Open Source: Empowering the Community

Xiaomi’s decision to release MiMo-7B under the Apache 2.0 license is a game-changer. The license permits use, modification, and commercial application, requiring mainly that copyright and license notices be preserved. The models and documentation are readily available on:

- Hugging Face: Repositories like XiaomiMiMo/MiMo-7B-RL and XiaomiMiMo/MiMo-VL-7B-RL.

- GitHub: Checkpoints for all versions (Base, SFT, RL-Zero, RL).

This fosters community collaboration and positions Xiaomi as a key player in democratizing AI. Developers and enthusiasts can experiment, contribute improvements, and accelerate innovation.

https://github.com/XiaomiMiMo/MiMo

https://www.gizmochina.com/2025/05/02/xiaomi-launches-mimo-7b-its-first-open-source-llm-for-reasoning-and-coding/

6. Key Differences with Other Models

| Feature | MiMo-7B-RL | OpenAI o1-mini | Alibaba Qwen-32B |
|---|---|---|---|
| Parameters | 7 billion | Not disclosed | 32 billion |
| Math reasoning | 95.8% (MATH-500), 68.2% (AIME 2024) | Comparable on AIME 2024 | Lower on AIME 2024 |
| Code generation | 57.8% (LiveCodeBench v5) | Comparable on LiveCodeBench | Lower on LiveCodeBench |
| Resource footprint | Compact, suitable for edge computing | Larger, requires more resources | Larger, requires more resources |
| License | Apache 2.0 (open source) | Proprietary | Apache 2.0 (open weights) |
| Training data | 25 trillion tokens, optimized RL | Not disclosed | Not disclosed |

https://www.gizmochina.com/2025/05/02/xiaomi-launches-mimo-7b-its-first-open-source-llm-for-reasoning-and-coding/

https://www.fonearena.com/blog/452648/xiaomi-mimo-open-source-ai-model.html

https://www.scmp.com/tech/big-tech/article/3308483/smartphone-giant-xiaomi-unveils-ai-model-joining-fierce-competition-china

7. Challenges and Opportunities

Challenges:

- Competition: While MiMo-7B excels in specific tasks, it lags behind leading models like Google Gemini 2.0 in general reasoning.

- Brand Perception: Xiaomi needs to overcome its primarily hardware-focused image to establish itself as an AI leader.

- Scalability: Maintaining performance in real-world applications will require continuous improvements in training and infrastructure.

Opportunities:

- Integrated Ecosystem: Integrating MiMo-7B into Xiaomi devices (smartphones, IoT, vehicles) opens possibilities for connected experiences.

- Open-Source Community: The open-source release fosters global collaboration, attracting talent and accelerating development.

- Emerging Markets: MiMo-7B’s efficiency makes it ideal for regions with limited technological infrastructure.

8. Conclusion

MiMo-7B marks a significant milestone in Xiaomi’s AI strategy. Its focus on logical reasoning, compact size, and open-source accessibility challenge industry norms. From assisting students with math to optimizing software development, MiMo-7B has the potential to transform various sectors. As Xiaomi continues integrating it and the global community adopts it, MiMo-7B is poised to be a cornerstone in AI’s evolution.
